Late in July, President Donald Trump used his Twitter-powered megaphone to muse about postponing the Nov. 3 election, citing alleged — and widely debunked — concerns about mail-in voter fraud.

Despite widespread efforts to fact-check the president — and make clear he lacks the power to delay the election — Trump’s voter fraud myth was repeated in Wisconsin. In a July 30 Twitter post, NBC 15 in Madison ran the tweet verbatim, asking viewers’ opinions of the message but failing to provide context about the harmful falsehood.

Several commenters expressed alarm at the post.

“Not only is it not a good idea, it is an illegal action, and your headline is exceedingly irresponsible journalism,” one user wrote.

The station did not respond to requests for comment.

The episode shows how disinformation — content that is intended to deceive — can spread on social media even after being widely exposed as false. It also demonstrates how national influencers with vast followings wield extraordinary power to distort reality. And it shows how everyone is responsible for spreading or containing false information that circulates online — and poses a threat to democracy.

The president’s words come as multiple forces threaten to disrupt the 2020 presidential election. A top national security official on Aug. 7 reported that Russia, China and Iran were attempting to “sway U.S. voters’ preferences and perspectives, shift U.S. policies, increase discord in the United States and undermine the American people’s confidence in our democratic process.”

Democratic House Speaker Nancy Pelosi told reporters that Russian interference aimed at boosting Trump’s re-election far outweighs efforts by the other two countries.

Wisconsin in the crosshairs

Disinformation can be carefully targeted to locations and demographics where it is likely to have the biggest impact. Wisconsin received a disproportionate amount of targeted disinformation from Russian actors in 2016 because of its status as a swing state, according to research led by Young Mie Kim, a journalism professor at the University of Wisconsin-Madison and an affiliated scholar at the Brennan Center for Justice, a nonpartisan law and public policy center.

Wisconsin Watch and First Draft, a leading resource on the spread of disinformation, identified multiple instances in which disinformation with murky origins appeared to target Wisconsinites.

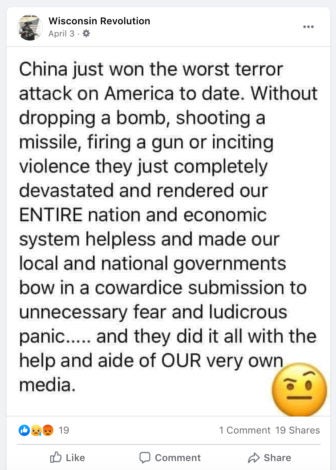

The Facebook page Wisconsin Revolution, for example, uses stock images for both profile and cover photos and posts divisive, sometimes xenophobic content. This spring, posts suggested a connection between China, the U.S. media, the “establishment” and the spread of the novel coronavirus. The page was inactive for all of 2018 and most of 2019, but posted a large volume of anti-Hillary Clinton content in the run-up to the 2016 election and stepped up pro-Trump and anti-Gov. Tony Evers content in mid-April.

Fake accounts known as bots can also amplify divisive content. Some of it is produced by people who traffic in inflammatory material, including extreme partisans. The effect is that these voices appear to be shouting louder and gaining more traction than they would with purely human audiences.

One account identified by Wisconsin Watch appears to be a real person with bot-like tendencies. The account, @Carol_Peaslee, tweets with remarkable frequency: nearly 60 times a day, on average, between mid-June and early August, with most activity occurring overnight and during early morning hours.

The account posts a large volume of conservative propaganda and conspiracy theories about COVID-19 treatments and Evers’ response to the pandemic, among other subjects. Some of the account’s followers are likely bots, although most appear to be real people, a Wisconsin Watch analysis shows.

The holder of the @Carol_Peaslee account could not be reached for comment. The account has blocked direct messages. And a voice message left with a phone number associated with a person named Carol Peaslee in Wisconsin was not returned.

Biden meme launched, spreads

On March 3, just before the nation’s attention zeroed in on battling COVID-19, Joe Biden swept 10 out of 14 Super Tuesday states and became the frontrunner for the Democratic presidential nomination.

But the former vice president’s victory also made him a bigger target than he had been before: Even before he was declared the presumptive nominee, numerous pages and accounts on Facebook and Twitter were circulating a meme implying that Biden was senile.

“I’m Joe Biden, and I forgot this message,” it read, under an image of Biden looking into the camera that had been captured from his campaign announcement video. The meme was shared widely on pro-Trump pages as well as in pro-Sen. Bernie Sanders and anti-Biden groups, where it was seen, liked and shared thousands of times.

On Twitter, an account allegedly based in Wisconsin, @WiCheesehead1, shared the “I’m Joe Biden, and I forgot this message” meme. The same account has shared a variety of fabricated and hyper-partisan content, including a meme that used Biden campaign branding with a fake image of Hunter Biden, the candidate’s son, at a strip club. The account has also promoted an unsubstantiated theory linking the coronavirus outbreak to the buildout of 5G networks. The holder of that account also could not be located.

Although they can be entertaining, political memes that take aim at candidates are often examples of disinformation — false information that is intentionally spread to cause harm.

Biden, of course, never says “I forgot this message” in his campaign announcement. And while many people who saw the meme probably interpreted the text as a humorous dig rather than a literal quote, they nonetheless may have had second thoughts about Biden’s mental fitness for the presidency after seeing it, potentially tamping down turnout for Biden in later contests.

The meme’s content also insidiously meshed with one of Russia’s goals in its ongoing information war against America — discouraging political participation.

That is also one of the primary goals of Russian “sock puppets”: accounts that post under false identities and pretend to be Americans, according to Josephine Lukito, who studied disinformation as a doctoral candidate in the UW-Madison journalism school. Lukito’s work on the accidental amplification of Russian disinformation by the mainstream media leading up to the 2016 U.S. election was cited in the Mueller report on Russian election interference.

When such content is accidentally amplified by real people who are unaware of its origins, it becomes misinformation. Misinformation is false information that is not necessarily meant to deceive but is spread across the internet by people who believe it to be real.

The implications of dis- and misinformation for democracy are far-reaching. Lukito says disinformation is spread by a variety of foreign and domestic actors — not just Russians — with the goal of hurting adversaries, encouraging resentment and division and sowing distrust in the media, which play an important role in delivering factual information to the public.

Memes simple, effective

Because of their popularity and simplicity, memes are a great way to accomplish these goals.

Creating a meme is often as simple as taking a widely available photo, pasting false or misleading text over it and posting it on social media. (Not all memes spread bad information: Consider the power of “flatten the curve” in persuading Americans to stay at home to curb the spread of the coronavirus.)

In the case of the Biden meme, an early version had a watermark from a Facebook community called Americans for Liberty, which published the meme a few hours before polls began closing on Super Tuesday. Americans for Liberty — which posts libertarian and anti-left content and uses an image of the Declaration of Independence and U.S. Constitution as its cover photo — did not respond to a request for comment from Wisconsin Watch.

Every day, dozens of similar pages and accounts pump divisive content into the social media stream, providing few clues as to who is behind the messaging. Like Americans for Liberty, they often have scant “about” pages, generic profile pictures and post inflammatory content. All of those criteria are red flags, according to Lukito. If there is no clear contact information for a group or organization, it is a sign that “they don’t want to be seen,” she says.

Instagram users posing as Americans

Kim, the UW-Madison journalism professor, conducted a review of dozens of Instagram accounts linked to Russia, describing her findings in an article on the Brennan Center website.

“Its trolls have gotten better at impersonating candidates and parties, more closely mimicking logos of official campaigns,” she wrote. “They have moved away from creating their own fake advocacy groups to mimicking and appropriating the names of actual American groups.”

The Internet Research Agency is a St. Petersburg-based organization with ties to Russian oligarchs and the Kremlin that has played a key role in Russia’s active disinformation campaign against the United States.

Facebook estimates that 126 million Americans may have been exposed to IRA content on its platform between 2015 and 2017, and IRA content received 187 million engagements on Instagram in the form of likes, comments and shares, according to a U.S. Senate Intelligence Committee report.

Kim says the current IRA effort sometimes takes on a Trojan horse-style approach, in which innocuous content is used to draw in followers who can later be audiences for — and spreaders of — disinformation.

“They’ve increased their use of seemingly nonpolitical content and commercial accounts, hiding their attempts to build networks of influence,” she wrote.

Among online forms of voter suppression, Kim noted that one has been particularly prevalent during the current election cycle: same-side candidate attack, which seeks to make a candidate within a party unacceptable to voters aligned with that party. Anti-Biden memes like the one posted by Americans for Liberty are examples of same-side candidate attacks when they get picked up by Sanders supporters.

In some of those instances, left-leaning individuals could accidentally amplify anti-Biden content which they do not realize is Russian, delegitimizing Biden’s candidacy at the risk of lowering Democratic turnout and giving President Trump a boost in November.

‘I just ignore that kind of stuff’

It can be easy to get lost in what feels like a deluge of partisan rancor online. So, what should ethical social media users do?

One answer is to avoid liking or sharing suspicious or inflammatory content, according to Lee Rasch, the executive director of LeaderEthics-Wisconsin, a La Crosse, Wisconsin-based nonprofit that promotes integrity in American democracy.

This is especially important given that in 2016, the Russians were intent on sowing division, with many of their strategies “specifically aimed at really pushing at U.S. buttons in terms of extreme language … intentionally trying to get people riled up and angry at each other,” according to Lukito.

Rasch spends time commenting on his friends’ posts, warning them of questionable sources posting content with the purpose of dividing people. He says this is just one of the strategies everyday people can practice to prevent the spread of disinformation.

“You find our confidence in government, our trust in government is really eroding. But what’s underlying that is people have greater concerns that they don’t know what’s true or false … And so the integrity of our democracy is (in danger) if we allow that to go unchecked in some way,” Rasch says.

He adds, “We need to help the average citizen as much as we can because if people feel powerless, they’re (either) not going to … vote or they may feel that their voting is irrelevant.”

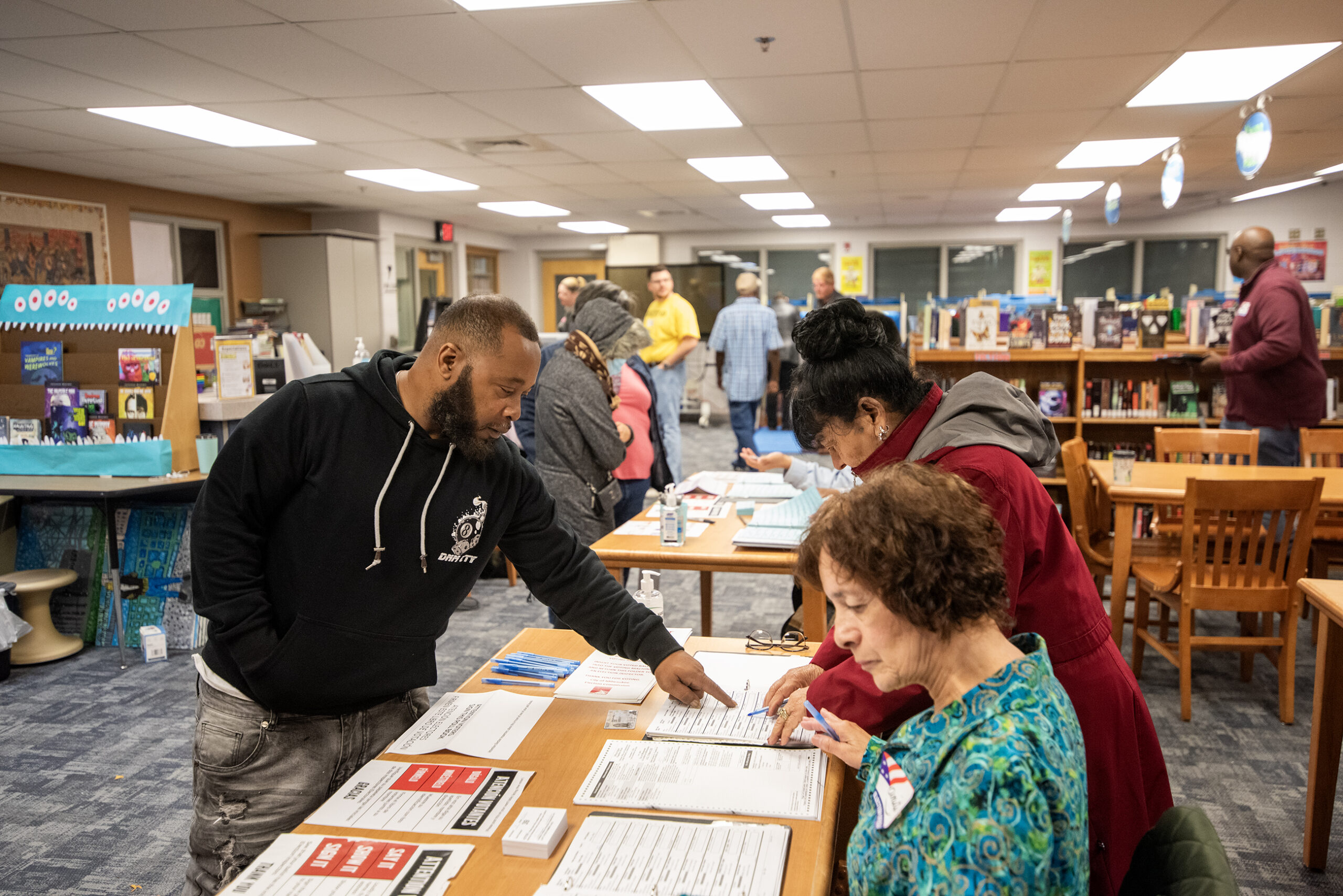

Some Wisconsin residents, like Patti Metcalf, have decided to take defense against disinformation a step further by participating in discussions about how to identify and avoid malicious content.

This spring, Metcalf participated in a workshop called “How ‘Fake’ is the News?” led by Wisconsin Watch board member Jack Mitchell, a retired UW-Madison journalism professor and long-time director for Wisconsin Public Radio. Metcalf is retired — and she is a savvy Facebook user.

“I just ignore that kind of stuff,” Metcalf says of content like the Biden meme. “There was one that somebody sent about the Muslim immigrants — ‘This whole shipment of arms was found in their luggage,’ right? And it was nowhere else … and I (commented) ‘Hey, guys, be skeptical. This is Russian trolls!’”

Misinformation: It’s on us

The fact that inflammatory content receives any engagement at all places some of the onus to stop the spread of that content on everyone who engages online, says David Becker, an expert on election administration and disinformation. Becker leads the Center for Election Innovation and Research, a Washington, D.C.-based organization that aims to ensure that elections are secure. His background includes election work at The Pew Charitable Trusts.

“Collectively all of us have accepted responsibility for the fact that we allow this disinformation to work — that we have allowed Facebook to evolve into this platform where they actually make money by allowing us to share garbage with each other,” Becker says.

Despite misinformation aimed at discouraging some Wisconsinites from voting, Becker is optimistic that turnout in the state will be high in 2020. But he says it is up to voters to think critically about their sources of information before they head to the polls.

“I still am a big believer in the idea that the antidote to bad information is good information, and a big believer in the First Amendment,” Becker says. “But I’d be the first to admit that that paradigm has been challenged significantly.”

Reporter Howard Hardee contributed to this report, which was produced as part of an investigative reporting class at the University of Wisconsin-Madison School of Journalism and Mass Communication under the direction of Wisconsin Watch Managing Editor Dee J. Hall. Wisconsin Watch’s collaborations with journalism students are funded in part by the Ira and Ineva Reilly Baldwin Wisconsin Idea Endowment at UW-Madison. The nonprofit Wisconsin Watch (wisconsinwatch.org) collaborates with WPR, PBS Wisconsin, other news media and the UW-Madison School of Journalism and Mass Communication. All works created, published, posted or disseminated by Wisconsin Watch do not necessarily reflect the views or opinions of UW-Madison or any of its affiliates.