Wisconsin is in a mental health crisis, with a significant shortage of psychiatrists and people waiting six to nine months for mental health services like counseling. Can artificial intelligence fill a health care gap?

WPR’s “Wisconsin Today” recently spoke with two local mental health care professionals who are embracing artificial intelligence in Wisconsin’s mental health care system while, they say, keeping ethics and patient safety top of mind.

AI and assessing therapists

Before becoming a licensed therapist, Heather Hessel had a career in information technology for more than 25 years.

Today, she is a licensed marriage and family therapist and an assistant professor of counseling, rehabilitation and human services at the University of Wisconsin-Stout. She recently received an award for her research on AI and therapy.

Hessel said, as an educator, the most interesting use of AI for mental health is how it can be used to improve the work of therapists. For instance, some AI programs can record a therapy session and provide feedback.

“Once a therapist gets licensed, they’re no longer under supervision,” Hessel said, adding that sessions are private and therapists don’t really get performance reviews the way other professionals do.

“Even though we have continuing education, there’s still this question of ‘Are we really improving ourselves as therapists?’” she said. “So, I’d like to see over the next decade a real push towards helping therapists become better therapists through the use of AI.”

Hessel stressed that therapy sessions are always confidential and any systems therapists use must be secure and comply with the Health Insurance Portability and Accountability Act, or HIPAA.

Hessel said AI is also being used as a teaching tool for therapists in training. LiNARiTE.AI is an AI program that allows students to roleplay with a virtual client. The student can even specify the client’s problems and mood.

Hessel said that although these AI programs are simulations, they give students the ability to practice skills and gain confidence.

AI and big data sets

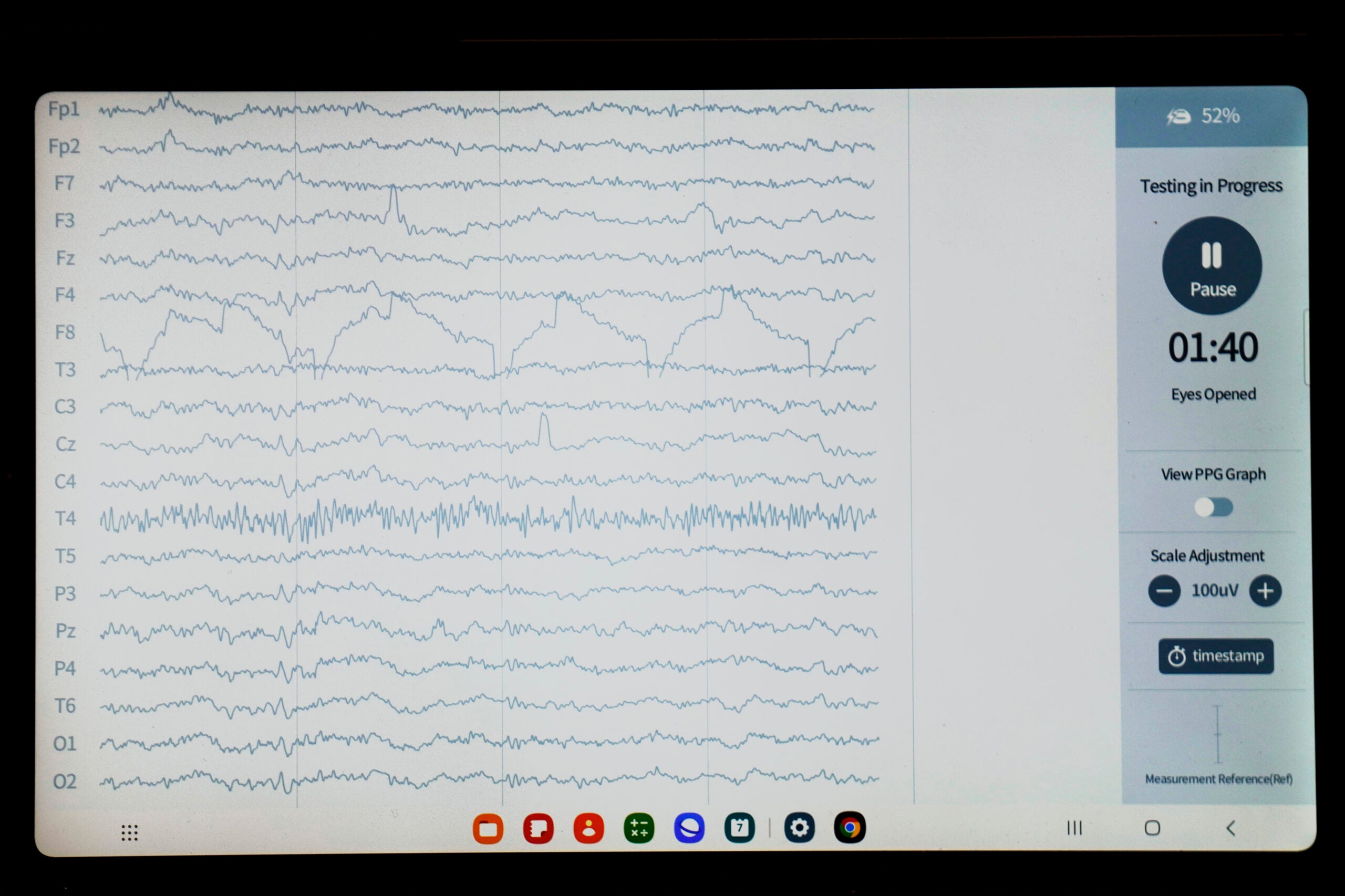

Another use of AI in mental health care is deploying machine learning algorithms to analyze large datasets. AI can search medical information such as patient history, imaging scans and lab results to identify patterns.

Rogers Behavioral Health, which has multiple locations in Wisconsin, works with people experiencing mental health emergencies such as addiction or suicidal ideation. It’s one of the few behavioral health care facilities utilizing AI, Brian Kay from Rogers said in a press release.

Kay told “Wisconsin Today” they’ve used AI to find patterns in how people respond to different treatments and to identify risk factors for people who might be considering suicide.

Kay said that by using large data sets to examine behavioral factors in suicide, they found sleep disturbance was highly correlated with suicide risk. They then changed their inpatient treatment programming to address lack of sleep.

“There is no human on this planet that’s able to predict and identify patterns with hundreds of thousands of variables,” Kay said. “That’s where AI can come in and be very good at understanding those patterns. But it requires the clinician to interpret what those patterns are, interpret what’s meaningful, and then, more importantly, interpret what’s modifiable.”

Ethical concerns top of mind

Although Hessel and Kay are excited about the growing opportunities AI can offer mental health care providers and patients, there are ethical concerns.

They agree that patient-facing apps and therapy chatbots are limited. Kay stressed that AI shouldn’t replace clinicians. And Hessel said therapeutic chatbots aren’t very good, although they will likely get better.

“I do think [therapeutic] chatbots can present a level of risk that we need to be really careful and cautious about,” Hessel said.

For example, in June 2023, NPR reported that Tessa, a chatbot originally designed by researchers to help prevent eating disorders, was giving some users dieting advice. Advocates say such advice can fuel an eating disorder.

But one of the largest issues with AI in mental health care is going to be bias in data sets, they said.

A recent study from the Yale School of Medicine showed bias can emerge as AI collects data and trains models. The study said biases in AI algorithms are not just possible; they are a significant risk if not actively countered.

The risk is that AI could affect health care for certain groups of people.

“We work as therapists really hard to identify our own implicit biases when we’re working with clients who are very different from ourselves, and we wouldn’t want our own biases to be reinforced by an AI system,” Hessel said.

Also, as Hessel’s students highlight, the use of AI has a huge environmental impact.

According to the United Nations Environment Programme, AI data centers produce electronic waste, use an immense amount of water to cool the electrical components and use significant amounts of energy to complete AI tasks.

A request made through ChatGPT, an AI-based virtual assistant, requires 10 times the electricity of a Google Search.

So as these mental health care professionals increasingly incorporate artificial intelligence into their practices, they acknowledge the potential but hope to maintain the human touch in mental health care.

Wisconsin Public Radio, © Copyright 2025, Board of Regents of the University of Wisconsin System and Wisconsin Educational Communications Board.